SEO Funnel Breakdowns

See how real brands generate millions in revenue with SEO - get the breakdowns now!

Request the videos now!

⚠️ Important: You will receive the audit confirmation by phone.

Let Google work for you, because visitors become customers.

*free of charge & without obligation

"An agency that can do everything can't do anything really well."

- This realization led us to clearly specialize in SEO.

Our focus as an SEO agency quickly evolved into a specialization in e-commerce. This specialization resulted from our tried-and-tested 3-phase concept, which has been bringing online stores in competitive niches to top positions in a predictable manner for several years now.

The memorably confrontational name "WOLF OF SEO" stands for our commitment to our clients to win the battle for the top positions clearly - and with all permitted means.

At WOLF OF SEO, we work in a purely data-based, methodical way and have geared every process towards scaling online stores. For this reason, we also work completely performance-based for online stores with suitable SEO potential - so you get exactly what you pay for.

WOLF OF SEO is known from:

Agencies can talk all day long. We know that at the end of the day, it's not the coffee, the small talk or the golf handicap that counts for your company - it's the results. Our wolves will eat your current SEO agency for breakfast.

Arrange a free and non-binding initial consultation now.

Strategic lead

Has consulted 300+ companies on SEO and trained 100+ people in operational SEO. Entrepreneur and process expert. Builds your external SEO department.

Operational lead

Has operationally managed 100+ projects and gained on-page and off-page SEO experience from 200+ projects. Trains your staff as if they were ours.

Content expert

1,000+ SEO texts implemented, 100+ copywriters trained, types 100 words per minute. Ensures that your texts rank on Google.

Link building expert

5,000+ backlinks built, 700+ websites & blogs in network, 50+ magazines in network. Makes your brand look dazzling.

We know how the game is played - really. Our experience is based purely on practice.

Our methods are up to date, based on the highly competitive American market and very different from the slow, stiff German SEO standard.

Our 3-phase SEO process is the result of more than 300 projects. The process is applicable to any industry and niche and provides the golden framework for the SEO development of your project. We retain full flexibility to adapt to your initial situation and market environment - yet the overall course still follows the 3 phases.

We bring in our specialized SEO department. Whether a strategic lead, operational lead, copywriter, link building manager or pure operational manpower, we bring it all to your company. The monthly cost will be less than hiring a single SEO manager.

We are 100% specialized in e-commerce SEO. Every one of our processes is optimized for this playing field. It's all about results, progress and, of course, your sales. We work strictly according to the 80/20 rule and know which levers really make a difference. We only work on results and don't waste time (i.e. your budget).

We think with you and understand your business, your monetization and your goals. Our processes are 100% digitized and remote compatible. We train your team in all operational activities and processes, promote knowledge transfer to your team, take the lead and have your back. You are an entrepreneur and have enough other fires to put out.

Be open to going the way of the wolves instead of the sheep. We are strongly oriented towards the international market. As in most marketing disciplines, the German SEO market tends to lag behind the international market by a few years. We are happy to leave the slow lane to your competitors. In doing so, we will regularly challenge your beliefs about how SEO works.

SEO is not performance marketing. Typically, SEO is the second or third marketing channel that an e-commerce project builds. As with almost all sustainable things, the investment is only recovered over time. Until then, an already running marketing channel such as performance marketing should comfortably cover your investment in sustainable SEO.

Good output needs good input. Each of our processes is designed to take as little time and as few resources as possible from you and your team. However, we still need regular approvals and briefings, and we need to understand your brand. This requires about 1-2 hours of your team's time per week.

The elephant in the room. Our concept is aimed at companies that are willing to invest at least €2,000/month in SEO.

With a smaller budget, our progress is more like the pace of a tortoise than a wolf.

SEO is a long-term revenue channel. We value long-term partnerships and want to scale your business with you.

We want to celebrate successes and milestones together with you. Let's build a great marketing channel for your brand together.

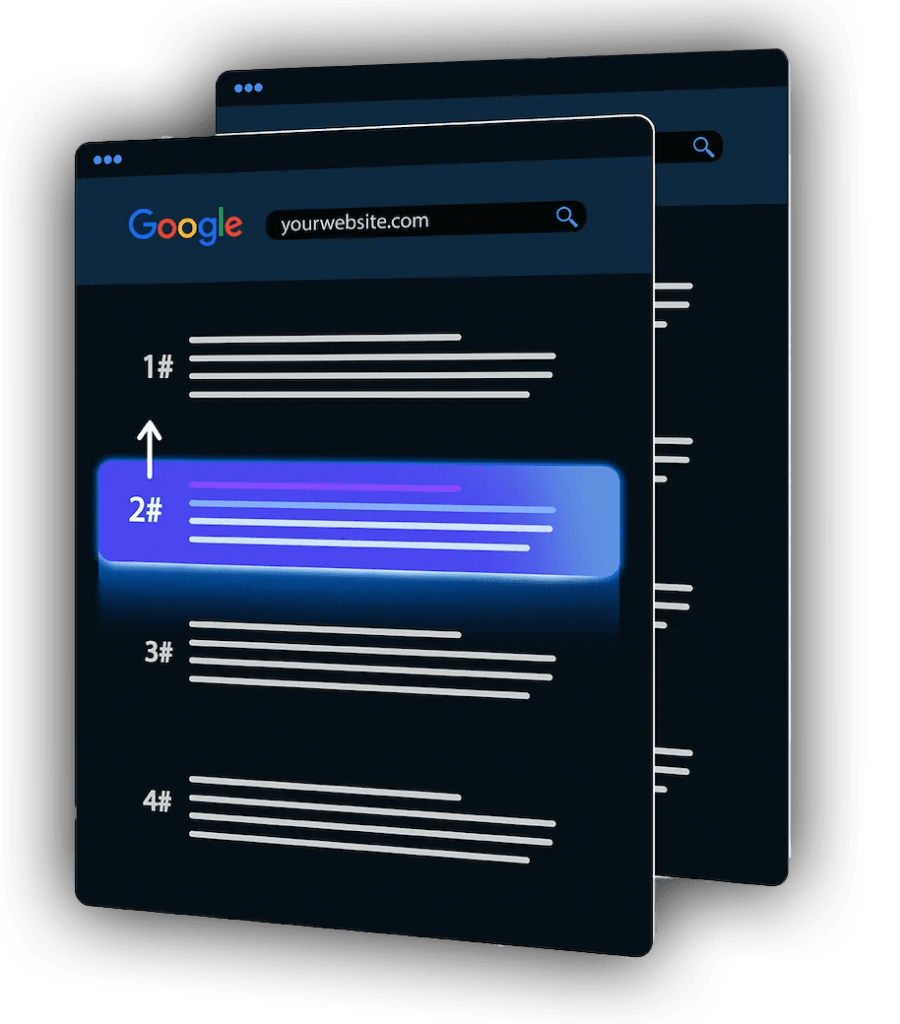

You have a new online store, offer a service or are working on your local business - but sales just aren't increasing? Despite a lot of effort, the traffic you hoped for is not materializing. Have you ever thought about SEO? While many still underestimate search engine optimization, you can leave your competition behind with our help. With our tried and tested strategy, we can ensure that your website ranks for the right keywords and dominates the top search results. And let's be honest - who scrolls down any further?

... in the Google rankings. Why is this important? Because it makes you much more visible online, attracts more customers to your site and thus increases traffic and sales. Search engine optimization can do just that. And we at WOLF OF SEO take the right measures to ensure that your website gets off to a rocket start in the rankings!

We work according to our proven 3-phase SEO strategy with the aim of building a sustainable, six-figure sales channel for you. From the analysis phase to the content phase to the biggest SEO lever: we accompany and support you - all the way, over several months. Why months? Because organic traffic takes time. If you want to see measurable results on day 1, you need to place ads. But if you're looking for long-term rather than short-term success, our SEO professionals are the right choice for you.

Complete data is the most important foundation for any decision.

In our analysis phase, we untangle every SEO problem, analyze the market down to the smallest search query and make every one of your competitors fully transparent.

Every page that should rank on Google needs an SEO text.

Here, we work according to a data-driven process, with copywriters selected by topic, to earn your brand the rankings it deserves.

Backlinks - The strongest SEO factor for years. They are the ace up your sleeve to overtake even the market leaders.

We make sure that online magazines & bloggers report positively about your brand & improve your rankings with keyword-optimized backlinks.

Why should you tackle SEO as soon as possible, with us as the SEO agency on your side? Because search engine optimization can open up a completely new sales channel for you. Up to now, your store may have been visited mainly by loyal existing customers who, fortunately, just keep coming back. A few recommendations bring you new customers sporadically. But as an experienced SEO agency, we know: there is wasted potential. Your products or services definitely deserve more visibility. And with SEO we get the full potential out of your business.

Through search engine optimization you get organic traffic that you did not have before. In our SEO agency, we find keywords tailored to your target group and create relevant content. These and other measures ensure that new customers find you - via Google search. This can be an essential factor for the success of an online business, because around 3.5 billion search queries are made via Google every day. And soon you could be at the top of some searches instead of on page 3.

Short and sweet: Our SEO specialists increase the visibility of your website. Better rankings increase traffic, more users find your site and your sales increase accordingly.

Test your SEO revenue potential now with our calculator ...

... Find out whether SEO support is worthwhile for your website!

If you haven't heard of SEO yet, ads may ring a bell. Many companies place online advertisements to bring in more traffic. The principle is usually pay-per-click: a monthly budget is set, and the ads are placed on that basis. They appear at the top of Google's search results, marked with the word "Ad".

If we optimize your site with regard to SEO aspects, you can be listed directly under the ads - without a budget or payment per click. You are only one initial consultation away from a professional SEO strategy. As your agency, we take time for your business and create a strategy with the right measures after a detailed SEO analysis. What are they? That depends entirely on the current state of your website. Your industry is also important.

Search engine optimization simply means optimizing your website so that Google can rank it in the best possible way. Picture it like this:

Google has a little robot called a crawler. It scours the countless pages on the World Wide Web for content that is relevant to users. If it finds online stores and websites that could match certain search queries, it adds them to the index from which the search results are drawn. This is how Google knows:

"Aha - that could be relevant for users with this interest."

But what if it finds nothing? Well - then it can't index anything in a targeted way. Your website is classified as less relevant for users ... and disappears at the bottom of the search results.
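To make this picture a little more concrete, here is a minimal sketch in Python of the indexing idea. It is a toy model and not how Google actually works: the example pages and the names `build_index` and `search` are hypothetical and chosen purely for illustration.

```python
from collections import defaultdict

# Hypothetical example pages; a real crawler would fetch these from the web.
PAGES = {
    "shop.example/running-shoes": "lightweight running shoes for trail and road",
    "shop.example/hiking-boots": "waterproof hiking boots for trail walks",
    "shop.example/about": "",  # no text, so the crawler finds nothing to index
}

def build_index(pages):
    """Map each word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def search(index, query):
    """Return the URLs that contain every word of the query, sorted."""
    words = query.lower().split()
    if not words:
        return []
    matches = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(matches)

index = build_index(PAGES)
print(search(index, "trail shoes"))  # only the page containing both words
print(search(index, "about"))        # the empty page is never found
```

The page without any text never shows up, no matter what is searched for. That is the situation described above: if the crawler finds nothing, it cannot index anything in a targeted way.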

With strategic search engine optimization (onpage and offpage), we as your SEO advertising agency get the full potential out of your website:

We optimize your page with regard to all SEO parameters, so that the small crawler robot can immediately see for which search queries your page is relevant. As a result, it will be displayed more frequently to users in searches.

But it is not only the frequency that matters here, but also the quality. So that you don't end up on page 4 of the search results, we make sure during our SEO support that you rank better than your competitors. Google and the crawler should think:

"If a user is looking for this, they will be 100% satisfied with this site, and the search intent will definitely be fulfilled."

Because as an agency, we are your external SEO team and you don't have to hire extra staff. Imagine if your marketing team had to get to grips with technical SEO, onpage and offpage optimization, etc. ... far too complicated! Of course, you could do quite superficial SEO, but we know from our own experience:

You will not be able to sustainably improve your organic traffic in this way. For this you need continuous work and in-depth SEO knowledge. And that's what you get from us as an experienced SEO agency.

Our team is made up of SEO experts from various fields - from the perfect keywords to link building and content.

With our SEO work, we provide you with many benefits from which your business will also benefit in the long term:

Your rankings in the search results will improve through our SEO consulting. You can see our successes so far in the SEO Case Studies. With clever measures, we bring your website more top rankings and more keywords onto page 1.

Your target group feels addressed. As professionals, we don't just do SEO in all directions, but effectively target your audience. We keep an eye on the buyer persona and customer journey both during keyword research and when creating SEO content. This ensures that exactly the right customers come to your store.

We underline your authenticity - with relevant backlinks on other websites. They refer users to you and show: This business has expertise. And not only potential customers find this appealing, but also the search engine.

Your website will be enhanced by creative, high-quality SEO content. Your customers get a lot of valuable information about products, categories and services, or in the blog about topics they deal with in everyday life. You show: I know what I am talking about. That creates trust. Good content also puts your business in the right light. And of course, our SEO agency produces specifically optimized texts for you, which the search engine's crawler takes into account.

→ A sustainable increase in traffic: Our SEO strategy and the resulting better rankings sustainably increase visibility and traffic on your website. Logical, because the higher your pages rank, the more often they are clicked.

→ Rising sales: If better rankings lead more visitors to your site, more sales can be generated there, and with them a higher turnover.

→ In short: Through SEO you generate a new sales channel.
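The chain from rankings to traffic to sales can be sketched as simple arithmetic. The following Python snippet is only an illustration under stated assumptions: the function name `estimate_monthly_revenue` is hypothetical, and the search volume, click-through rates, conversion rate and order value are made-up figures, not measurements or results from any project.

```python
def estimate_monthly_revenue(monthly_searches, click_through_rate,
                             conversion_rate, average_order_value):
    """Rough estimate: searches -> visitors -> orders -> revenue."""
    visitors = monthly_searches * click_through_rate
    orders = visitors * conversion_rate
    return orders * average_order_value

# All figures below are made-up assumptions for illustration only.
low_ranking = estimate_monthly_revenue(10_000, 0.01, 0.02, 80.0)
top_ranking = estimate_monthly_revenue(10_000, 0.25, 0.02, 80.0)

print(f"Lower ranking: about {low_ranking:.0f} EUR per month")
print(f"Top ranking:   about {top_ranking:.0f} EUR per month")
```

Only the click-through rate differs between the two calls, which mirrors the reasoning above: a higher ranking means more clicks, and with the same conversion rate and order value, more turnover.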

In our first conversation we want to understand your business and your goals to develop the perfect SEO strategy for you. At the end of the conversation we will give you an initial assessment of your web project and the necessary steps for future SEO success.

Our wolves are hungry ...

... for new projects. We are a young, professional team - always up to date. Instead of offering many different services, we have specialized in one: SEO. As an SEO agency, we put our heart and soul into it and always keep the latest search engine developments and updates on our radar. We have been gaining SEO experience as an agency for more than 10 years and have already managed a wide range of projects. That gives us the know-how and practical skills to make your SEO even more effective.

... without compromise. In our SEO agency, you will have your own personal contact who will look after you from the very beginning of our collaboration. Nevertheless, you will receive the expertise of the entire WOLF OF SEO agency team - because only bundled skills can achieve long-term success in SEO optimization. We will keep you up to date with regular updates throughout the entire project. You can help shape every phase and we will adapt the SEO strategy optimally to your business and your wishes. Sounds good? Then book your no-obligation initial consultation - and we will provide you with initial information about the SEO potential of your website.

Getting started is always the hardest part, we know that. That's why we make it as easy as possible for you to get started in SEO: on the one hand, of course, with our comprehensive service, and on the other with a few practical gifts that you can also use for yourself outside of our collaboration (completely free of charge and without obligation, of course). First and foremost, these are our SEO check and the sales calculator. They allow you to see what is possible even before we start working together.

Let's start from the beginning: How well is your site already optimized? Perhaps you have already invested some time in the first steps for search engine optimization - in our SEO check we will show you what this has achieved. We analyze your online presence free of charge with regard to various parameters such as page structure, loading times, indexing, meta data, backlinks and usability. This small health check will show you how necessary further measures are for your SEO project. And then we come into play as an SEO agency: We help you to solve problems and build on your strengths.

After the SEO check, you may wonder whether working with us as an SEO agency is worthwhile for you at all. We ourselves are 100% convinced of it - but you can check the potential of your website yourself with our sales calculator. There you can find out how SEO in Germany could affect your store or your online presence.

Would you like to find out more about SEO as an organic sales channel? Then simply book a non-binding initial consultation with our SEO agency! We will prepare ourselves intensively for the SEO consultation and show you what is possible with us on your side.

In addition, we can give you a first outlook on your potential success and explain which measures make the most sense for your industry and your store in terms of visibility.

We take care of your success. From the first top positions to €100,000 monthly turnover.

What goals do you want to achieve through search engine optimization? As an SEO agency, we offer you our expertise right from the initial consultation and put our heart and soul into every project. We pursue various goals:

1. Increase traffic and generate leads: Turnover is what matters for your business - that's why we bring potential customers to your website through the appropriate SEO measures.

2. Increase sales: Through keyword and content work, we strengthen the visibility not only of your homepage but of each individual category and, if necessary, even each product in your store. As an SEO agency, we thus give your website a welcome competitive edge.

3. Increase brand awareness: You have founded your own brand - but nobody knows about it? Our agency provides targeted support for your business with SEO: We help you to reach your target group and make your brand better known through Google. Visibility is the be-all and end-all for online success.

4. Leave the competition behind: Honestly - this goal is self-explanatory. With the right measures and us as the SEO agency on your side, you can leave the still-sleeping competition behind ... and overtake them in the rankings.

A good SEO agency offers you an all-round service, from analysis to implementation. As SEO experts, we specialize in search engine optimization and can use SEO tools and other tools to boost your business. Whether you run an online store, own a local business or offer a service ...

➜ E-Commerce SEO: For online stores, visibility in search results is crucial because, along with social media, it is an important sales channel. If you don't want to invest money in ads or don't want to give way to the competition, you should do professional Search Engine Optimization. Our SEO agency will be happy to do this for you.

➜ Local SEO agency: You have a local location and sell products or offer a service there? Then don't just rely on word-of-mouth - increase your catchment radius and attract new customers through search engine optimization. We know what is important for Local SEO in Germany.

➜ SEO for large corporations: In B2B, too, a good ranking can open up another sales channel. Especially when expanding or building brand awareness, we are happy to help as an SEO online agency.

➜ Services: Do you offer your service online? With SEO we put it in the right light in the search results. No matter if it's pest control, consulting or similar - the ranking influences your traffic significantly.

That's why we are location-independent. Whether you are a self-employed person working from home or have a large company in Europe, we can work professionally for you as an SEO agency. Right from the start, we have adapted our workflows and SEO strategy to digital work so that we can work online with all SEO tools etc. without having to be on site.

This creates flexibility, because we don't have to meet "live" to work together. Whether you run an online business or a company with a physical location, we work for you online. You can easily arrange meetings and updates with us from the comfort of your desk. Thanks to our tried-and-tested tool stack, we have exactly the right solution for all aspects and can work completely flexibly in terms of time.

Questions, cooperation or suggestions?

Then write us an email directly!

We are looking forward to your project or idea!
